Creatity Services

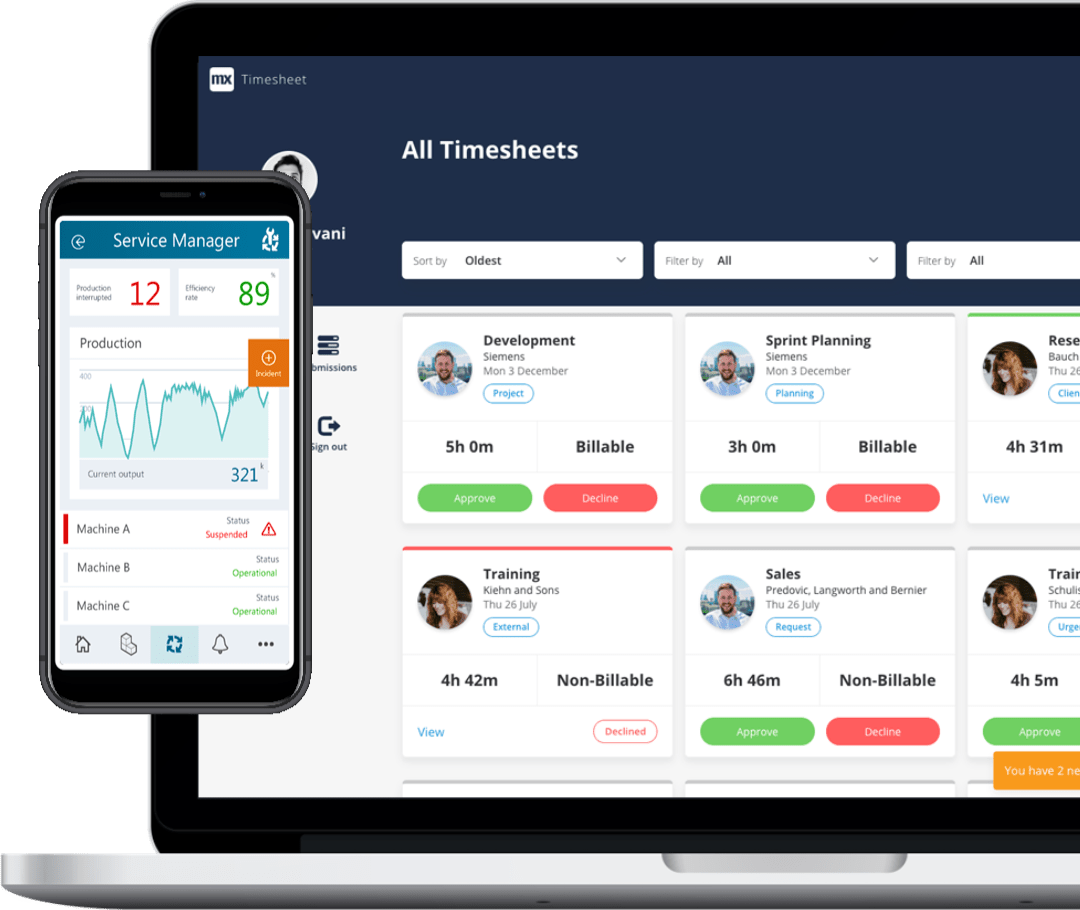

Mendix low-code software development

Utilize Mendix to revolutionize your company’s digital landscape. We have extensive experience in developing a wide array of solutions for some of the largest enterprises. What can Creatity do for you?

- Evaluate the suitability of Mendix within your organization.

- Deliver a POC in days, an MVP in weeks, and full-fledged applications in weeks or months.

- Assist you throughout the entire Mendix platform roll-out journey.

- Develop customized low-code solutions using Mendix.

- Offer project-based collaboration or long-term advisory and partnership.

- Provide guidance during the digital transformation process.

- Create native mobile, tablet, and web applications.

- Provide expertise in IoT, IIoT, AI, and AR/VR technologies.

- Replace legacy technologies with modern solutions.

- Handle deployment and management of Mendix applications in public cloud, private cloud, or on-premises environments.

- Develop a portfolio of applications with robust governance, utilizing REST and microservices architecture.

Let Creatity be your partner in making smart business decisions, establishing measurable targets, and achieving them together.

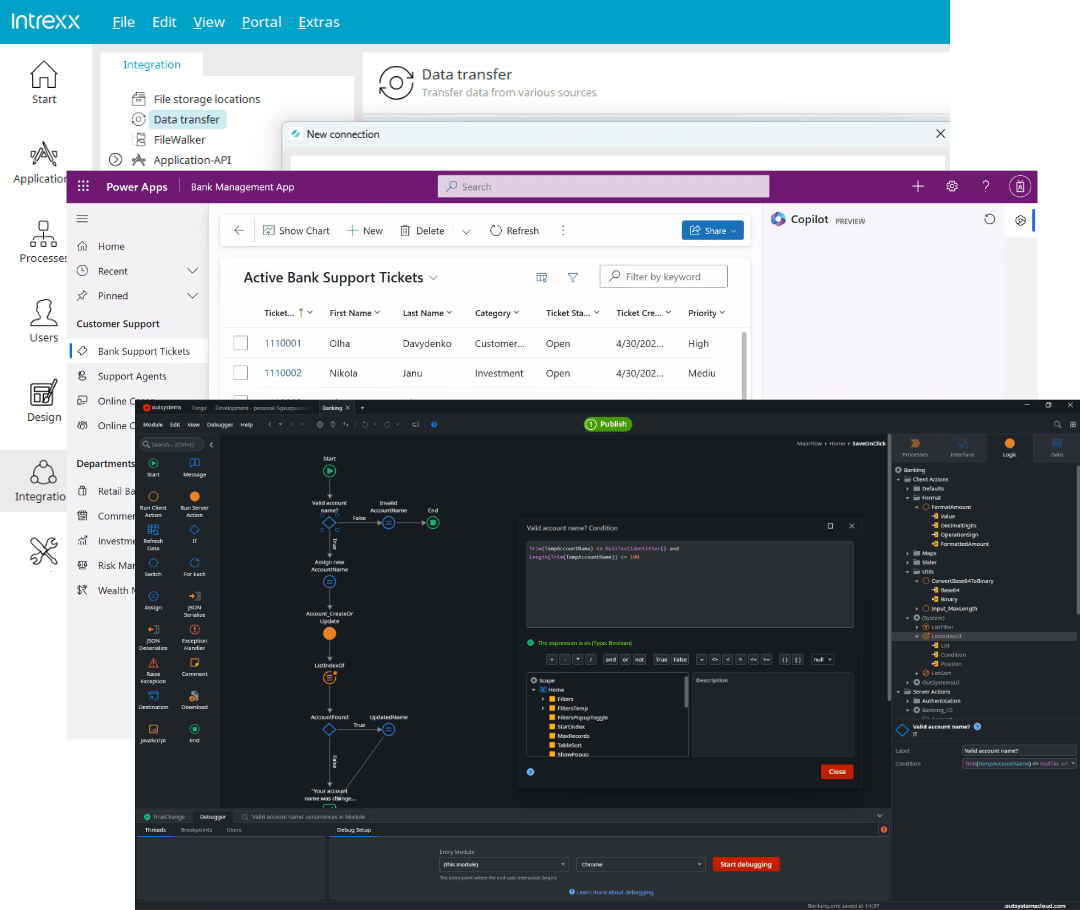

Low-code software development in OutSystems, Power Apps, Intrexx

Experience application development several times faster than traditional coding, thanks to a visual building approach with prebuilt components. Discover the robust security features integrated into low-code platforms. How can Creatity help you?

- Development in OutSystems, Mendix, Power Apps, Intrexx and other low-code platforms.

- We will guide you through the assessment and implementation of low-code in your company.

- We will scale and adapt the applications at breakneck speed to ensure your business needs are always met.

- Projects can be hosted in a public or private cloud or on-premises, with support for AWS, Azure, Docker, Kubernetes, and many other technologies.

- Creatity provides training for your talented employees to develop and maintain low-code applications.

With low-code, businesses can effortlessly articulate their needs and have them swiftly addressed by developers.

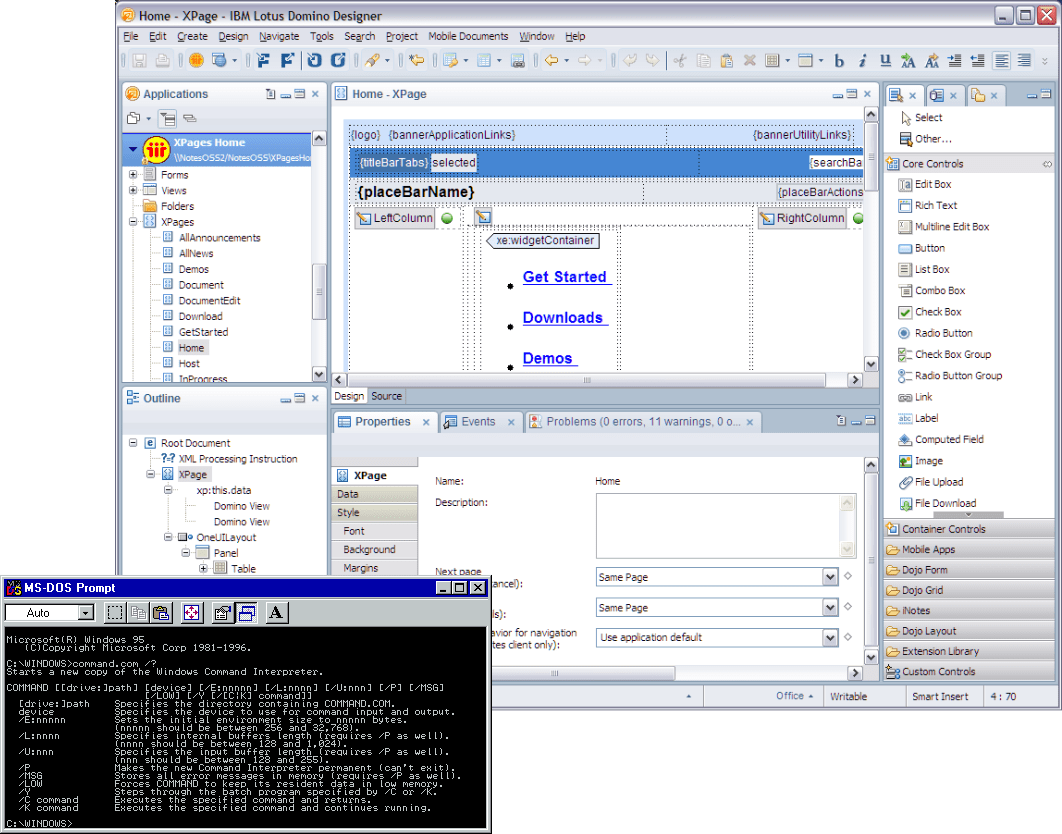

Legacy technology migration

Maintaining outdated technologies can be costly and problematic, often leading to stability and security issues. Modernizing your systems can reduce long-term costs, enhance stability, and better secure your data, improving overall efficiency. Legacy technologies we can gradually migrate or replace include:

- Database and application development platforms like Lotus Notes or Microsoft Access.

- Custom in-house applications built with outdated languages (like Visual Basic, Delphi).

- Paper-based processes.

- Desktop applications (built using languages like C++, Java, C#).

- Old custom CRM and ERP systems.

- Custom BI dashboards.

- Complex integrations relying on legacy middleware solutions.

- Outdated inventory management systems.

- Spreadsheets (like Excel, Google Sheets) used for complex business logic or data manipulation.

Witness firsthand the speed of development, scalability, and robustness of modern low-code software development.

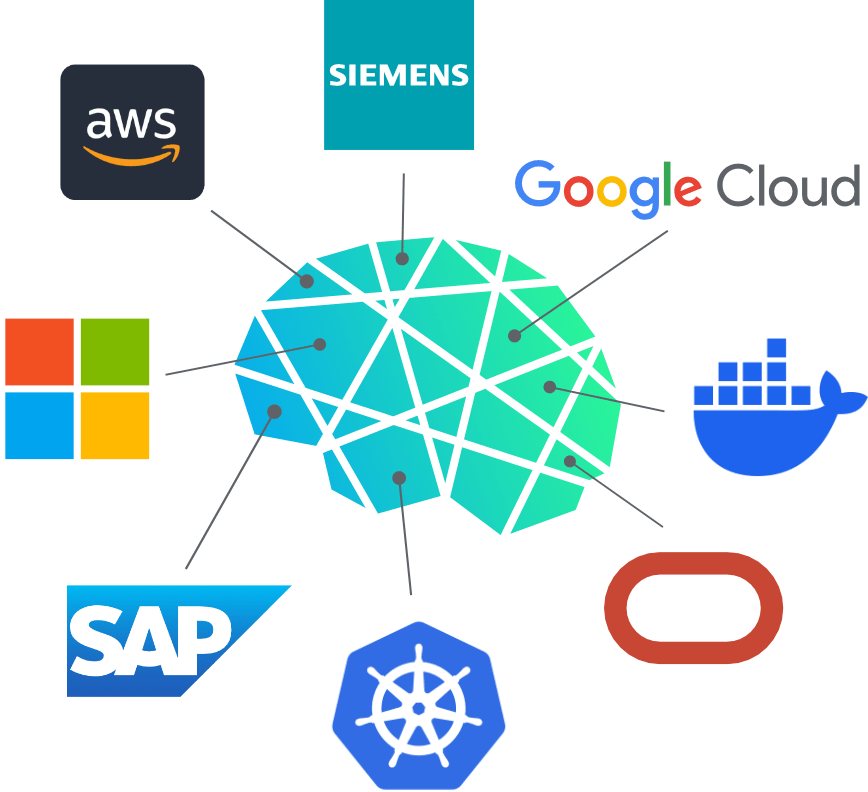

System integration & cloud solutions

Explore the synergy of system integration and cloud solutions with Creatity. Rely on us to handle your software management needs while connecting various systems, ensuring smooth and efficient operations. Creatity offers:

- Deployment of your low-code applications in:

  - Public cloud

  - Private cloud

  - On-premises

- Seamless integration with leading cloud platforms such as AWS, Azure, Google Cloud, SAP, VMware, and others.

- Containerization and orchestration of your applications with Docker and Kubernetes.

- Smooth integration with SAP, PLM (product lifecycle management) systems like Siemens Teamcenter, APS (advanced planning and scheduling) software, Microsoft Dynamics 365, and many other enterprise systems.

- Support for consuming and exposing REST APIs, SOAP web services, and OData.

Partner with Creatity to seamlessly connect your technologies, unlocking new possibilities for efficiency and innovation in your organization.

Consultation & analysis services

Are you considering the benefits of low-code software development but unsure where to start? Our team of experienced low-code experts is here to guide you through every step of the process.

- Tailored Solutions: Collaborate with our experts to determine if low-code is the right fit for your organization and which platform will best align with your business objectives.

- Fast Roll-out: We will help you quickly select the appropriate components, rapidly roll out the platform, and establish application governance.

- Personalized Guidance: Benefit from in-depth assessments of your current systems and processes to uncover opportunities for improvement and optimization using low-code solutions.

- Test the Waters: Explore the potential of low-code through a proof of concept (POC) delivered in just days.

With a wealth of experience in low-code development across various industries, Creatity brings unparalleled expertise to every project. We’re committed to partnering with you every step of the way, from initial consultation to ongoing support, to ensure your success.

Training, support & expertise

At Creatity, we understand that adopting low-code solutions is just the beginning of your digital transformation journey. That’s why we offer comprehensive training and ongoing support to ensure your success every step of the way. We offer:

- Tailored Training: Our team of low-code experts provides personalized training programs tailored to your organization’s needs, empowering your team to harness the full potential of Mendix, OutSystems, Power Apps, Intrexx and other low-code platforms.

- Dedicated Support: From implementation to optimization, our dedicated support team is here to provide guidance, troubleshooting, and best practices to keep your projects on track and your team confident.

- Instructor-led Training: Courses include Mendix Citizen Developer, Mendix Crash Course, Mendix Rapid, Mendix Intermediate, Mendix Advanced, Mendix Agile, and Mendix for DevOps, with many more available for other low-code platforms. All courses are offered both online and on-site.

Ready to Elevate Your Low-Code Journey? Empower your team with the training, support, and expertise they need to succeed.